Unplugging the Future of Intelligence

Casey Handmer does not see the world the way you do.

At E1 Ventures, we spend a lot of time looking into infrastructure and energy, and we recently published a deep dive on orbital datacenters. From time to time, I also come across articles that I would like to share with readers of our Substack, and Terraform Industries recently published a blog post that is absolutely worth your time. The piece argues that the only way to scale AI compute is to bypass the archaic electrical grid entirely in favor of direct-current, solar-plus-battery “islands.” While Western utilities are flat-footed, trapped in regulatory amber, China is already playing this endgame at a staggering scale, pumping out a full terawatt of solar panels annually and pushing stationary battery prices below $70/kWh in 2025. At that price point, the traditional gas turbine no longer makes sense.

Casey Handmer, the founder of Terraform Industries and a polymathic refugee from the halls of NASA’s Jet Propulsion Laboratory, has spent the last few years becoming the unofficial architect of a new kind of industrialism. He is the engineer’s engineer. His latest thesis is a declaration of war against the 19th-century infrastructure that is currently strangling the 21st-century’s greatest ambition: Artificial Intelligence.

To understand his vision, you have to understand the sheer, grinding stupidity of how a modern data center works.

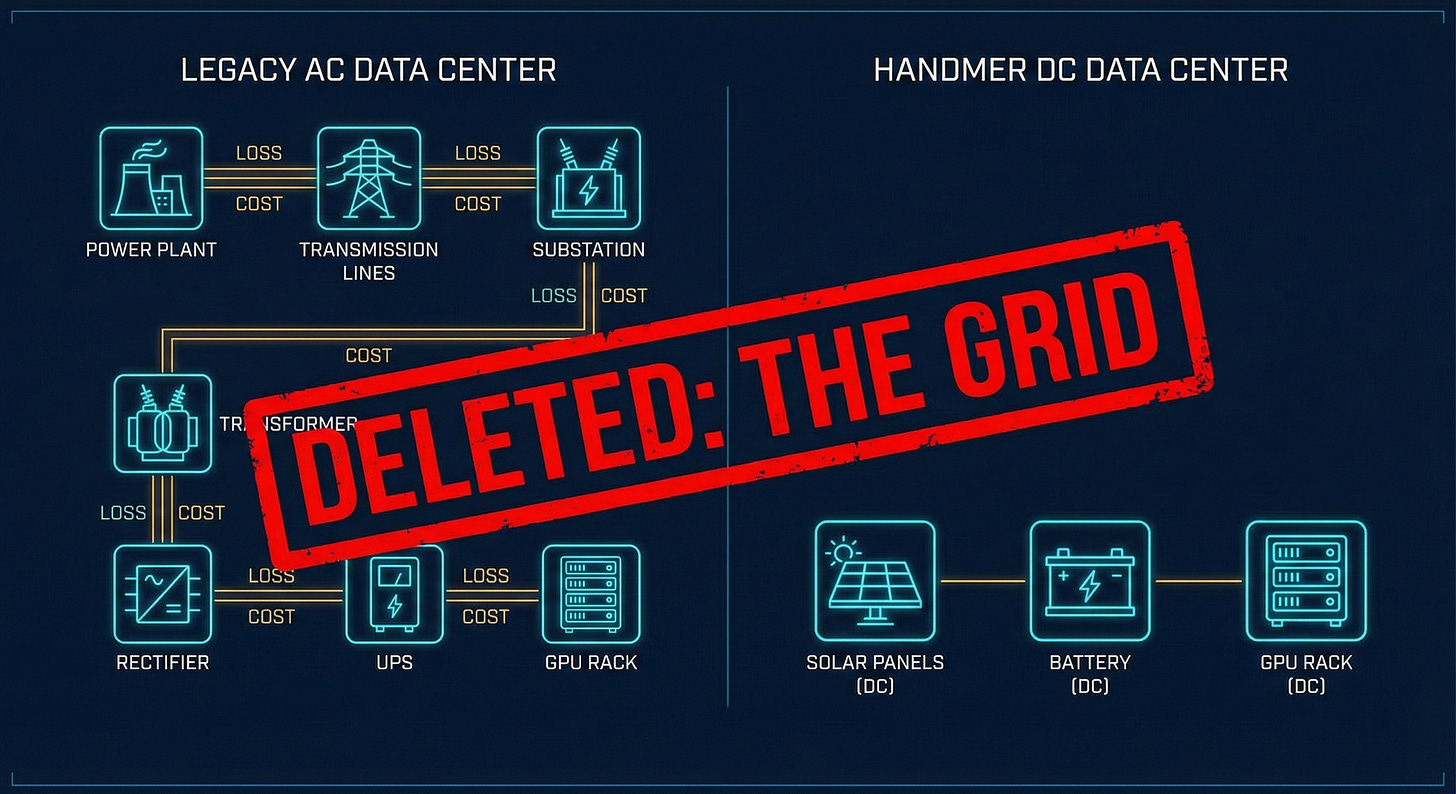

Right now, if you want to train a massive language model, you need tens of thousands of GPUs. They operate on low-voltage direct current (DC). But the world around them is built on high-voltage alternating current (AC).

We take power from a distant plant, step it up to massive AC voltages to move it across the country, step it down at a substation, move it to a data center, and then use massive, expensive, heat-spewing power electronics to convert it into DC so the chip can actually use it. Efficiency bleeds out at every stage. We build massive transformers, inverters, and rectifiers. We deal with the “hum” of the 60 Hz grid. It is a logistical nightmare that adds billions to the cost and, more importantly, years to the construction timeline.
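To see why a long chain of conversions hurts, note that the efficiencies of stages in series multiply. The sketch below uses made-up per-stage efficiencies purely for illustration; none of these numbers come from the Terraform piece, and real values vary by equipment and load.

```python
from math import prod

# Hypothetical per-stage efficiencies. These are illustrative placeholders,
# not measured values and not figures from the original article.
STAGE_EFFICIENCY = {
    "step-up transformer": 0.99,
    "long-distance transmission": 0.95,
    "substation step-down": 0.99,
    "facility UPS / rectifier": 0.95,
    "rack power supply (AC to DC)": 0.94,
}

def chain_efficiency(stages):
    """Overall efficiency of stages in series is the product of the stages."""
    return prod(stages.values())

overall = chain_efficiency(STAGE_EFFICIENCY)
print(f"Delivered fraction of generated power: {overall:.3f}")
print(f"Lost along the way: {1 - overall:.1%}")
```

Even when every individual stage looks respectable, the product is noticeably lower than any single stage, which is the intuition behind deleting stages rather than optimizing them.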

The AI boom has hit a wall, and that wall is the power grid. If you want a gigawatt of power today, the utility company will laugh at you. They’ll tell you to come back in 2032 after they’ve conducted seven environmental impact studies and upgraded three thousand miles of copper wire.

The response from the new school of hardware industrialism is a classic piece of Silicon Valley iconoclasm: Delete.

In the world of hardcore engineering (the world of SpaceX or Terraform), the most powerful tool in the shed is the deletion of requirements.

The “Direct Current Data Center” is the ultimate expression of this. The proposal is deceptively simple: Pave the desert with solar panels. Connect those panels directly to batteries. Connect those batteries directly to the GPU racks.

No grid. No AC converters. No gas turbines. No 200-mile transmission lines.

A solar panel is a slice of silicon that absorbs photons and drives electrons uphill. A GPU is a slice of silicon that regulates current flow. They are a natural match. By operating the entire system on high-voltage DC (around 1,000 V) and wiring loads in series, you can use the same electrons hundreds of times. For a given amount of power, higher voltage means lower current, and lower current means far less expensive copper. You also remove the “moving parts” of the energy world. A gas turbine is a temperamental, high-maintenance beast that requires a supply chain of fuel and specialized technicians. A solar panel just sits there. It has no moving parts. It asks for nothing but the sun.
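The copper argument follows from two textbook relations: current is power divided by voltage, and resistive loss is current squared times resistance. Here is a minimal sketch, assuming a hypothetical 100 kW load and a hypothetical 1 milliohm conductor (both invented for illustration), comparing a few bus voltages:

```python
def line_current_amps(power_watts, voltage_volts):
    """I = P / V for a DC feed."""
    return power_watts / voltage_volts

def resistive_loss_watts(power_watts, voltage_volts, resistance_ohms):
    """Heat dissipated in the conductor: I^2 * R."""
    current = line_current_amps(power_watts, voltage_volts)
    return current**2 * resistance_ohms

POWER_W = 100_000   # hypothetical load, not from the article
R_OHMS = 0.001      # hypothetical conductor resistance, not from the article

for volts in (48, 400, 1000):
    amps = line_current_amps(POWER_W, volts)
    loss = resistive_loss_watts(POWER_W, volts, R_OHMS)
    print(f"{volts:>5} V: {amps:8.1f} A, {loss:8.1f} W lost in the conductor")
```

For the same conductor, loss falls with the square of the voltage, so a designer can either accept much lower losses or use much thinner conductors for the same loss budget.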

But critics immediately point to the obvious flaw: The sun goes down.

This is where the engineering brilliance shines. In the legacy world, a data center must have “five nines” of reliability (99.999% uptime). This is a cult-like obsession that requires massive backup generators and redundant everything.

But for AI training, this is a multibillion-dollar mistake. AI training is a “flexible load.” A GPU doesn’t care if it works at 100% capacity at 2:00 PM and 30% capacity at 2:00 AM, as long as it gets its work done over the course of the month. The model introduces a “Governor”: a software layer that regulates the GPU’s power consumption based on the state of the battery.

The physics here are elegant. A GPU’s dynamic power consumption is proportional to the clock frequency multiplied by the square of the voltage, and a slower clock can run at a correspondingly lower voltage, so power falls roughly with the cube of clock speed. Throttle the clock by just a tiny fraction, say 3%, and power consumption drops by about 9%.
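The arithmetic behind the throttling claim can be checked in a few lines. This sketch assumes supply voltage scales linearly with clock frequency, an idealization that the source does not spell out; real chips have a voltage floor and static leakage, so actual savings will differ.

```python
def relative_power(clock_fraction, voltage_tracks_clock=True):
    """Dynamic power relative to full speed, from P proportional to f * V^2.

    If voltage is lowered in step with the clock (idealized assumption),
    power scales with the cube of the clock fraction. If voltage is held
    fixed, power scales only linearly with the clock.
    """
    voltage_fraction = clock_fraction if voltage_tracks_clock else 1.0
    return clock_fraction * voltage_fraction**2

for cut in (0.03, 0.10, 0.30):
    clock = 1.0 - cut
    saved = 1.0 - relative_power(clock)
    print(f"clock cut {cut:.0%}: power saved {saved:.1%}")
```

Under this assumption a 3% clock cut saves just under 9% of dynamic power, and the savings grow quickly with deeper throttling, which is what makes slowing down so much cheaper than shutting down.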

Instead of the data center going “dark” when the battery runs low, the “Governor” simply slows the thinking. It rations the spicy electrons to ensure the system never actually crashes. Simulations show that this approach can achieve 99.7% utilization without ever needing a single drop of natural gas or a connection to the grid. It’s better to think a little slower for an hour than to stop thinking entirely.
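To make the “Governor” idea concrete, here is a toy hourly simulation. Everything in it is an assumption for illustration: the half-sine solar profile, the linear throttling policy, the cubic power scaling, and the plant sizes. It is not Terraform’s model and should not be expected to reproduce the 99.7% figure.

```python
import math

def solar_output_mw(hour, peak_mw):
    """Crude half-sine daylight profile from 06:00 to 18:00 (assumption)."""
    h = hour % 24
    if 6 <= h < 18:
        return peak_mw * math.sin(math.pi * (h - 6) / 12)
    return 0.0

def governor_clock_fraction(state_of_charge, floor=0.3, full_above=0.5):
    """Hypothetical policy: full speed above `full_above` state of charge,
    then a linear ramp down to `floor` as the battery empties."""
    if state_of_charge >= full_above:
        return 1.0
    return floor + (1.0 - floor) * state_of_charge / full_above

def simulate(hours=72, peak_mw=5000.0, battery_mwh=14000.0, load_mw=1000.0):
    """Return the mean clock fraction over the run (1.0 means never throttled)."""
    stored = battery_mwh / 2          # start half full, at midnight
    work = 0.0
    for hour in range(hours):
        solar = solar_output_mw(hour, peak_mw)
        clock = governor_clock_fraction(stored / battery_mwh)
        demand = load_mw * clock**3   # idealized cubic power scaling
        available = stored + solar    # one-hour steps, so MW and MWh line up
        if demand > available:        # cannot spend energy that is not there
            demand = available
            clock = (demand / load_mw) ** (1 / 3)
        stored = min(available - demand, battery_mwh)  # surplus is curtailed
        work += clock
    return work / hours

print(f"Mean clock fraction: {simulate():.3f}")
```

The design point is that the load bends instead of breaking: as the battery drains, demand shrinks faster than throughput does, so the system coasts through the night at reduced speed rather than tripping offline.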

In the AI era, the only metric that matters is tokens per dollar.

The data shows a “bifurcation” in the energy market. On one side, you have the old world: pure gas power. It’s cheap if you don’t care about emissions, but it’s hard to scale because nobody can build turbines fast enough. On the other side is the new world: pure solar and battery.

Between 2024 and 2026, the price of batteries plummeted. Chinese manufacturing giants began shipping integrated battery storage for under $70 per kilowatt-hour. At that price point, the math flips. The moment the cost of a battery and a solar panel drops below 10% of the cost of a gas turbine, the turbine becomes a liability. Under current assumptions, it is actually cheaper to delete the gas system entirely than to keep it as a backup. The complexity of the hybrid system eats the savings.
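For a sense of scale, the $70/kWh figure can be turned into a rough storage bill. The sketch below assumes a 1 GW load carried for 12 hours on batteries alone; the 12-hour sizing is an illustrative assumption, not a number from the piece, and it ignores depth-of-discharge limits, throttling, and installation costs.

```python
KW_PER_GW = 1_000_000

def battery_capex_usd(load_gw, hours_of_storage, usd_per_kwh):
    """Upfront cost of enough storage to carry `load_gw` for `hours_of_storage`."""
    energy_kwh = load_gw * KW_PER_GW * hours_of_storage
    return energy_kwh * usd_per_kwh

# Assumed sizing: 1 GW for 12 hours, at the article's $70/kWh price point.
cost = battery_capex_usd(load_gw=1.0, hours_of_storage=12, usd_per_kwh=70)
print(f"Storage for 1 GW over 12 h at $70/kWh: ${cost / 1e9:.2f} billion")
```

The function is linear in every input, so halving the battery price or letting the Governor trim the overnight load cuts the bill proportionally.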

The scale of what is being proposed is, frankly, insane.

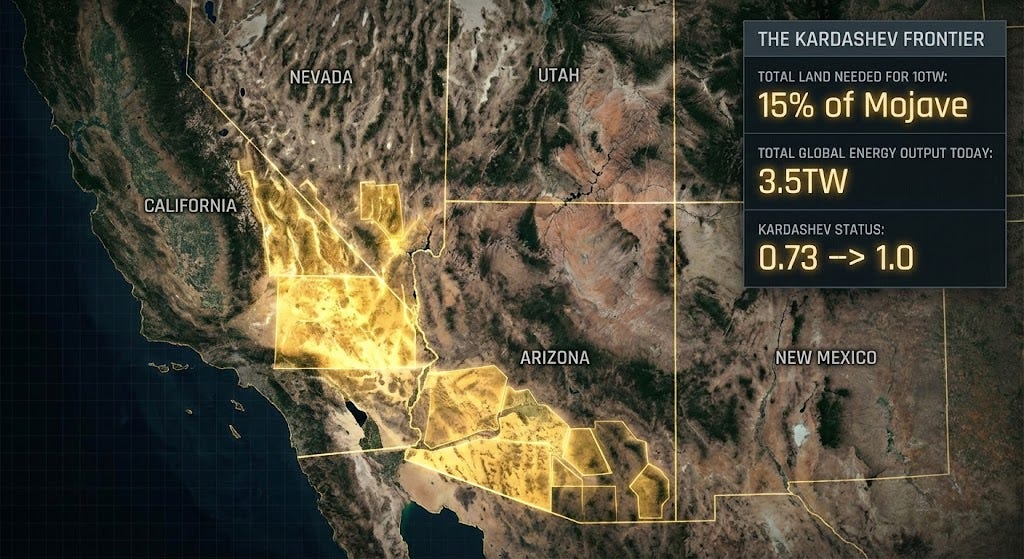

To power a gigawatt-scale AI data center, you need about 15,000 acres of solar panels. That’s a lot of glass. But the United States has 150 million acres of unpopulated desert west of the Mississippi.

If we paved that land, we wouldn’t just be powering AI. At 15,000 acres per gigawatt, 150 million acres works out to 10 terawatts of power. That is more than humanity’s total electricity generation today.
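The land arithmetic is easy to verify. The acreage figures below are the article’s; the world electricity generation figure (roughly 30,000 TWh per year) is an approximate outside number added here for comparison, not something the source states.

```python
ACRES_PER_GW = 15_000           # from the article
DESERT_ACRES = 150_000_000      # from the article
HOURS_PER_YEAR = 8_760

# Approximate global electricity generation, added as an assumption for scale.
WORLD_GENERATION_TWH_PER_YEAR = 30_000

total_tw = DESERT_ACRES / ACRES_PER_GW / 1_000   # GW converted to TW
world_average_tw = WORLD_GENERATION_TWH_PER_YEAR / HOURS_PER_YEAR

print(f"Desert build-out: {total_tw:.1f} TW")
print(f"World average electric output: {world_average_tw:.2f} TW")
print(f"Ratio: {total_tw / world_average_tw:.1f}x")
```

On these numbers the full build-out would be roughly three times the world’s average electrical output.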

This is where the vision shifts from “Silicon Valley startup” to “Kardashev Type I Civilization.” These DC data centers are the “seed” of a new energy abundance. For ten months of the year, these solar farms will be massively over-supplied with power. That “excess” power can be given away at near-zero cost to neighboring communities or used to power machines that pull CO2 from the air to create synthetic fuels.

However, just when you think the idea is grounded in the dirt of the Mojave, it looks up.

One of the most provocative sections of the thesis involves “Space-Based Inference.” If the regulatory hurdles on Earth (the permitting, the environmental lawsuits, the local NIMBYs) become too much, we simply take the GPUs to orbit.

SpaceX’s Starship is the enabler here. If you can launch a gigawatt of compute into a dawn-dusk sun-synchronous orbit (SSO), you get near-continuous sunlight. No batteries needed. No night-time. No “Governor.”

“Space AI has a unified regulatory regime,” Handmer notes dryly. “The vacuum is a harsh mistress, but she doesn’t require a three-year environmental impact study to install a rack.”

While the radiation of space degrades GPUs faster and the thermal management is a nightmare, the “utility” of a watt of power in space is a trillion times higher than the value of a watt used to heat a home. It is the ultimate application of the “High Ground.”

In this new “Direct Current” world, the only limit to human progress is how much of the planet we are willing to pave with the future.

Views expressed in posts, including articles, podcasts, videos, and social media, are those of the individual E1 Ventures personnel quoted in them and do not necessarily reflect the views of E1 Ventures, LLC or its affiliates. The posts are not directed to any current or prospective investors. They do not constitute an offer to sell or a solicitation of an offer to buy any securities and may not be used or relied upon in evaluating the merits of any investment.

The material available here, as well as on any associated distribution platforms or public E1 Ventures social media accounts and sites, should not be interpreted as investment, legal, tax, or other professional advice. You should consult your own advisers regarding legal, business, tax, and related matters concerning any investment. Any projections, estimates, forecasts, targets, or opinions contained in these materials are subject to change without notice. They may differ or be contrary to opinions expressed by others. Any charts or data included in these materials are for informational purposes only and should not be used as the basis for any investment decision. Certain information contained here has been obtained from third-party sources. While such information is believed to be reliable, E1 Ventures has not independently verified it and makes no representation regarding its accuracy or suitability for any particular situation. Some posts may include third-party advertisements. E1 Ventures has not reviewed such advertisements and does not endorse any of the content contained in them. All posts speak only as of the date indicated.

Nothing contained on Substack or on associated content distribution platforms should be construed as an offer to purchase or sell any security or interest in any pooled investment vehicle sponsored, discussed, or mentioned by E1 Ventures personnel. Nothing here should be interpreted as an offer to provide investment advisory services. Any offer to invest in an E1 Ventures–managed vehicle will be made separately and only by means of the respective fund’s confidential offering documents. Those documents must be reviewed in their entirety and are made available only to individuals or entities that meet specific qualifications under federal securities laws. These investors, which include only qualified purchasers, are considered capable of evaluating the merits and risks of potential investments.

There can be no assurances that E1 Ventures’ investment objectives will be achieved or that its investment strategies will be successful. Any investment in a vehicle managed by E1 Ventures involves significant risk, including the possible loss of the entire amount invested. Any investments or portfolio companies described or referred to here are not representative of all investments made by E1 Ventures, and there can be no assurance that such investments will be profitable or that future investments will achieve comparable results. Past performance of E1 Ventures’ investments, pooled vehicles, or strategies is not necessarily indicative of future results. Some investments, including certain publicly traded assets, may not be listed if the issuer has not granted permission for public disclosure.

E1 Ventures may invest in various tokens or digital assets for its own account. In doing so, E1 Ventures acts in its own financial interest. It does not represent the interests of any other token holder and has no special role in managing or overseeing any such projects. E1 Ventures does not undertake to remain involved in any project beyond its position as an investor.

I have a preprint doing the technoeconomics for this exact topic. https://www.researchsquare.com/article/rs-8272920/v1

Good to see how some things are coming together for you, Ana. A month ago you were writing about orbital data centers, and now xAI and SpaceX are merging to build them. I genuinely thought the whole idea was pure sci-fi.

Clearly, I need to start taking Casey’s ideas more seriously 😄